Zoic Studios used visual effects to recreate occupied New York and San Francisco.

What if Germany and Japan had won World War II? What would the world look like? That is the premise of Philip K. Dick’s 1962 novel and Amazon’s series, The Man in the High Castle, which is currently gearing up for its second season premiere in December.

The Man in the High Castle features familiar landmarks with unfamiliar touches. For example, New York’s Times Square has its typical billboards, but sprinkled in are giant swastika banners, images of Hitler and a bizarro American flag, whose field of stars has been replaced with yet another swastika. San Francisco, and the entire West Coast, is now under Japanese rule, complete with Japanese architecture and cultural influences. It’s actually quite chilling.

Helping to create these “new” worlds was Zoic Studios, whose team received one of the show’s four Emmy nods for its visual effects work. That team was led by visual effects supervisor Jeff Baksinski.

Zoic’s path to getting the VFX gig was a bit different than most. Instead of waiting to be called for a bid, they got aggressive… in the best possible way. “Both myself and another supervisor here, Todd Shifflett, had read Dick’s book, and we really wanted this project,” says Baksinski.

They began by making some concept stills and bouncing ideas off each other about what a German-occupied New York would look like. One of Zoic’s producers saw what they were up to and helped secure some money for a real test. “Todd found a bunch of late-’50s/early-’60s airline commercials about traveling to New York, and strung them together as one piece. Then we added various Nazi banners, swastikas and statues. Our commercial features a pullback from a 1960s-era TV. Then we pull back to reveal a New York penthouse with a Nazi soldier standing at the window. The commercial’s very static-y and beat up, but as we pull back out the window, we have a very high-resolution version of Nazi New York.”

And that, my friends, is how they got the show. Let’s find out more from Baksinski…

The Man in the High Castle is an Amazon show. Does the workflow differ from traditional broadcast shows?

Yes. For example, on our network TV shows, typically you’ll get a script each week, you’ll break it down and maybe have 10 days’ worth of post to execute the visual effects. Amazon and Netflix shows are different. They have a good idea of where their season is going, so you can start building assets well in advance.

High Castle’s version of the Brooklyn Bridge features a Nazi/American flag.

When we did the pilot, we were already building assets while I was going out to set. We were building San Francisco’s Hirohito Airport, the airplane that featured heavily in a few episodes and the cities of New York and San Francisco — a lot of that started before we ever shot a single frame.

It’s a whole new world with the streaming channels.

Everybody does it a little bit differently. Right now when we work on Netflix shows, we are working in shooting order, episode 105, 106, 107, etc., but we have the flexibility to say, “Okay, that one’s going to push longer because it’s more effects-heavy. We’re going to need four weeks on that episode and only two on this other one.” It’s very different than normal episodic TV.

Do you have a preference?

At the moment, my preference is for the online shows. I come from a features background where we had much longer schedules. Even worse, I worked in the days when movies had a year and a half’s worth of schedule to do their visual effects. That was a different era. When I came into television, I had never seen anything this fast in my life. TV has a super quick turnaround, and obviously audiences have gotten smarter and smarter and want better and better work; television is definitely pushing closer to a features-type look.

Assuming you get more time with the pilots?

I love pilots. You get a big chunk of the story going, and a longer post schedule: six to eight weeks. We had about six weeks on Man in the High Castle, which is a good amount of time to ask, “What does this world look like, and what do they expect?” In the case of High Castle, it was really about building a world. We were never going to create a giant robot. It was about how do we make the world interesting and support our actors and story? You need time to do that.

You were creating a world that doesn’t exist, but also a period piece that takes place in the early ‘60s. Can you talk about that?

We started with what the normal versions of New York and San Francisco looked like in the ‘60s. We did a lot of sketch work, some simple modeling and planning. The next step was what would New York look like if Germany had taken over, and how would San Francisco be different under the influence of Japan?

Zoic added a Japanese feel to San Francisco streets and buildings.

In the case of San Francisco, we looked at areas in other countries that have large Japanese populations and at how they influence the architecture: buildings that were initially built by somebody else and then altered for a Japanese feel. We used a lot of that for what you see in the San Francisco shots.

What about New York?

That was a little bit tougher, because if you’re going to reference back to Germany during the war, you have propaganda signs, but our story takes place in 1962, so you’ve got some 17 years there where the world has gotten used to this German and Nazi influence. So while those signs do exist, we scaled back and added normal signs with German names.

In terms of the architecture, we took some buildings down and put new ones in place. You’ll notice that in our Times Square, traffic doesn’t move as it does in real life. We altered the roads to show how traffic would move if somebody eliminated some buildings and put cross-traffic in.

You also added a little bit of German efficiency to some scenes?

Absolutely. It’s funny… in the show’s New York there are long lines of people waiting to get into various restaurants and stores, and it’s all very regimented and controlled. Compare that to San Francisco where we have people milling about everywhere and it’s overcrowded with a lot of randomness.

How much of what you guys did were matte paintings, and could those be reused?

We use a few different types of matte paintings. We have the Rocky Mountains, for example, in the Neutral Zone. Those are a series of matte paintings we did from different angles that show mountains, trees and rivers. That is reusable for the most part.

Other matte paintings are very specific. For example, in the alley outside of Frank’s apartment, you see clothes hanging out to dry, and buildings all the way down the alleyway that lead to this very crowded-looking city. Those matte paintings are shot-specific.

Then we use matte paintings to show things far off in the distance to cut off the CG. Our New York is maybe four square city blocks around in every direction. When we get down to that fourth block, we start using old film tricks, the kind they used to do on studio lots, where you start curving the roads, dead-ending, or pinching the roads together. There is no way we could build 30 blocks of CG in every direction. I just can’t get there, so we started curving the CG and doing little tricks so the viewer can’t tell the difference.

What was the most challenging type of effect you created for the show? Which shots are you most proud of?

We are most proud of the Hirohito Airport and the V9 rocket plane. What most people don’t realize is that there’s actually nothing there — we weren’t at a real airport and there’s no plane for the actors to interact with. The actors are literally standing on a giant set of grip pipe and crates and walking down a set of stairs. That plane looks very realistic, even super close-up. You see every bolt and hinge and everything as the actors walk out. The monorail and embassy are also cool.

What do you call on in terms of tools?

We use Maya for modeling and lighting environments and for any animation work, such as a plane flying or the cars driving. There is a plug-in for Maya called Miarmy that we used to create CG people walking around in backgrounds. Some of those shots have hundreds of extras, but it still felt a little bit thin, so we used CG people to fill in the gaps.

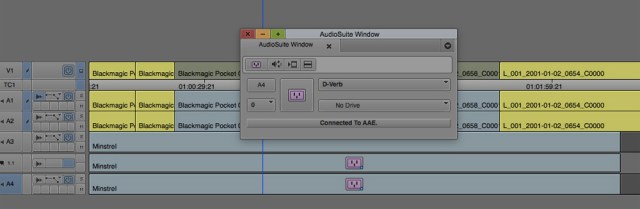

What about compositing?

It’s all Nuke. A lot of our environments are combinations of Photoshop and Nuke or projections onto geometry. Nuke will actually let you use geometry and projections in 3D inside of the compositing package, so some of our compositors are doing environment work as well.
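For readers curious about what “projections onto geometry” involves, here is a minimal Python sketch of the underlying idea: a painted image is treated as if it were cast from a camera, and each point on the 3D geometry looks up the pixel that the camera would see there. This is not Nuke’s API or Zoic’s setup. The `PinholeCamera` class, its fields, and the assumption that the camera looks down the negative Z axis are all illustrative choices.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PinholeCamera:
    """Hypothetical projection camera (names are illustrative, not Nuke's)."""
    focal_mm: float      # lens focal length
    aperture_mm: float   # horizontal film-back width
    width_px: int        # painting width in pixels
    height_px: int       # painting height in pixels

    def project(self, point: Tuple[float, float, float]) -> Optional[Tuple[float, float]]:
        """Map a camera-space point to (u, v) in [0, 1] on the painting.

        Assumes the camera looks down -Z. Returns None when the point is
        behind the camera or falls outside the painted frame.
        """
        x, y, z = point
        if z >= 0:
            return None  # behind (or level with) the camera
        depth = -z
        # Perspective divide onto the film plane, in millimetres.
        film_x = self.focal_mm * x / depth
        film_y = self.focal_mm * y / depth
        # Vertical aperture follows from the image aspect ratio.
        aperture_v_mm = self.aperture_mm * self.height_px / self.width_px
        u = film_x / self.aperture_mm + 0.5
        v = film_y / aperture_v_mm + 0.5
        if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
            return None  # geometry sits outside the painting
        return (u, v)


camera = PinholeCamera(focal_mm=50.0, aperture_mm=36.0, width_px=1920, height_px=1080)
center_uv = camera.project((0.0, 0.0, -10.0))   # point straight ahead
offset_uv = camera.project((1.0, 0.0, -10.0))   # point slightly to the right
```

A point directly in front of the camera lands at the middle of the painting, and points that drift sideways slide across it. That is why a projected matte painting holds up only for modest camera moves.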

Did you do a lot of greenscreen work?

We didn’t do any on the pilot, but did on the following episodes. We decided to go all roto on the pilot because the show has such a unique lighting set-up — the way the DP wanted to light that show — that green would have completely ruined it. This is abnormal for visual effects, where everyone’s always greenscreening.

Roto is such a painstaking process.

Absolutely. Our DP Jim Hawkinson was coming off of Hannibal at the time. DPs are always super wary of visual effects supervisors because when you come on the set you’re immediately the enemy; you’re about to tell them how to screw up all their lighting (smiles).

He said very clearly, “This is how I like to use light, and these are the paintings and the artwork.” This is the stuff I really enjoy. Between talking to him and director David Semel, and knowing that it was an RSA project, your brain immediately starts going to things like Blade Runner. You’re just listening to the conversations. It’s like, “Oh, this is not straightforward. They’re going to have a very contrast-y, smoky look to this show.”

We did use greenscreen on the rest of the episodes because we had less time. So out of necessity we used green.

What about rendering?

We use V-Ray, which is a global illumination renderer. We’d go out and take HDR images of the entire area for lighting and capture all of the DP’s lights; that’s what’s most important to me. The DP set up his lights for a reason. I want to capture as much of his lighting as humanly possible so when I need to add a building or car into the shot, I’m using his lighting to light it.

It’s a starting point because you usually build a little bit on top of that, but that’s typically what we do. We get our HDRs, we bring them into Maya, we light the scene inside of Maya, then we render through V-Ray, and it all gets composited together.
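To illustrate how an HDR capture of the set can light a CG object, the following Python sketch treats a latitude-longitude environment image as a light source and sums the light arriving at a surface facing a given direction. It is a toy version of image-based lighting, not V-Ray’s algorithm or Zoic’s pipeline. The function names, the nested-list image format and the Y-up convention are assumptions made for the example.

```python
import math


def direction(theta, phi):
    """Unit vector for polar angle theta (from +Y, 'up') and azimuth phi."""
    s = math.sin(theta)
    return (s * math.cos(phi), math.cos(theta), s * math.sin(phi))


def diffuse_irradiance(env, normal):
    """Cosine-weighted sum of a lat-long environment map.

    env    -- rows of (r, g, b) radiance tuples; row 0 is straight up
    normal -- unit surface normal as (x, y, z)
    """
    rows, cols = len(env), len(env[0])
    d_theta = math.pi / rows
    d_phi = 2.0 * math.pi / cols
    total = [0.0, 0.0, 0.0]
    for r in range(rows):
        theta = (r + 0.5) * d_theta
        # Texels near the poles cover less of the sphere than those at the horizon.
        solid_angle = math.sin(theta) * d_theta * d_phi
        for c in range(cols):
            phi = (c + 0.5) * d_phi
            d = direction(theta, phi)
            cos_term = d[0] * normal[0] + d[1] * normal[1] + d[2] * normal[2]
            if cos_term <= 0.0:
                continue  # light from behind the surface contributes nothing
            weight = cos_term * solid_angle
            for i in range(3):
                total[i] += env[r][c][i] * weight
    return tuple(total)


# A uniformly white sky: every direction has radiance 1.0.
uniform_env = [[(1.0, 1.0, 1.0)] * 64 for _ in range(32)]
up_facing = diffuse_irradiance(uniform_env, (0.0, 1.0, 0.0))

# Light only from the upper hemisphere, surface facing down.
half_env = [[(1.0, 1.0, 1.0)] * 64 for _ in range(16)] + [[(0.0, 0.0, 0.0)] * 64 for _ in range(16)]
down_facing = diffuse_irradiance(half_env, (0.0, -1.0, 0.0))
```

For the uniform sky the result should come out close to pi in each channel, which is the analytic answer for a constant environment. The downward-facing surface under a sky-only map receives nothing, matching the intuition that the captured lights, not arbitrary CG lights, decide what an added building or car looks like.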
